AIV Buddy Configuration

The AIV Dashboard provides a suite of intelligent assistants (AI Buddies) designed to simplify your data analysis and visualization tasks. This guide walks you step by step through the configuration process so that you can put these AI-driven tools to use.

The AIV Dashboard offers ten intelligent AI Buddies to enhance your data analysis and visualization experience:

- AIV Dashboard Buddy

- AIV Custom Visualization Buddy

- AIV Global Filter Buddy

- AIV SQL Buddy

- AIV Analyzer Buddy (Beta)

- AIV Forecast Buddy

- AIV Prediction Buddy

- AIV Analysis Buddy

- AIV Custom Column Buddy

- AIV Insight Buddy

Prerequisites

Required Credentials

Before setting up AI Buddies for optimal performance, ensure you have the following credentials prepared:

Kernel IP: The address (and port) of the server or system (referred to as the “kernel”) where the AI model or service is hosted. It is used to establish communication between the AI system and the application requesting AI services. An example Kernel IP could be http://aiv-ai:8001, where aiv-ai is the domain name or hostname of the server and 8001 is the port through which the server communicates with the AI system.
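To make the structure of this value concrete, the following minimal Python sketch splits the example Kernel IP into its scheme, hostname, and port using only the standard library. The helper name `parse_kernel_ip` is hypothetical and is not part of AIV; it only illustrates which parts the value is expected to contain.

```python
from urllib.parse import urlsplit


def parse_kernel_ip(kernel_ip: str) -> tuple[str, str, int]:
    """Split a Kernel IP URL into (scheme, hostname, port).

    Hypothetical helper for illustration only; AIV does not expose it.
    """
    parts = urlsplit(kernel_ip)
    if parts.scheme not in ("http", "https"):
        raise ValueError(f"expected an http(s) URL, got: {kernel_ip!r}")
    if not parts.hostname:
        raise ValueError(f"missing hostname in: {kernel_ip!r}")
    if parts.port is None:
        raise ValueError(f"missing port in: {kernel_ip!r}")
    return parts.scheme, parts.hostname, parts.port


scheme, host, port = parse_kernel_ip("http://aiv-ai:8001")
print(scheme, host, port)  # http aiv-ai 8001
```

A check like this can catch a missing port or scheme before the value is entered on the configuration page.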

AI Token: The maximum number of tokens allowed for processing in a single interaction with the AI model. Exceeding this limit may lead to truncated input, incomplete responses, or errors. Tokens are units of text, ranging from single characters to whole words, that the model uses internally to understand and generate language.

- Usage Example: AI Buddies can be configured with 3,000 tokens when using GPT-3.5 Turbo, ensuring efficient and safe execution within the model’s constraints.
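Exact token counts depend on the tokenizer of the model in use. As a rough sketch only, the Python snippet below estimates the token count of a prompt with the common rule of thumb of about four characters per token for English text, and compares it with a configured limit. The ratio and both function names are assumptions made for illustration, not values or APIs defined by AIV.

```python
import math

# Rough rule of thumb for English text; an assumption, not an exact tokenizer.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Return an approximate token count for `text`."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_token_limit(text: str, token_limit: int = 3000) -> bool:
    """Check whether `text` is likely to fit within the configured AI Token value."""
    return estimate_tokens(text) <= token_limit


prompt = "Show total sales by region for the last quarter."
print(estimate_tokens(prompt), fits_token_limit(prompt))
```

For precise counts, use the tokenizer that matches the configured AI Engine.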

AI Engine: The specific AI model or engine used to process requests, for example gpt-3.5-turbo or deepseek-chat.

AI Key: A unique API key provided by an AI service (e.g., OpenAI) that authenticates and authorizes access to the model. This key is required to integrate and interact with the provider's models. Example: sk-**********************************
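Because the AI Key is a secret, it is usually kept out of logs and screenshots, much like the masked example above. The following Python sketch shows one possible way to do that: it reads a key from an environment variable and prints only a masked form. The variable name `AIV_AI_KEY` and the helper `mask_key` are hypothetical and used only for this illustration.

```python
import os


def mask_key(key: str, visible: int = 3) -> str:
    """Return `key` with everything after the first `visible` characters replaced by '*'."""
    if len(key) <= visible:
        return "*" * len(key)
    return key[:visible] + "*" * (len(key) - visible)


# AIV_AI_KEY is a hypothetical variable name used only for this example.
api_key = os.environ.get("AIV_AI_KEY", "sk-example-not-a-real-key")
print(mask_key(api_key))
```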

To enable and configure these AI Buddies, follow these steps:

-

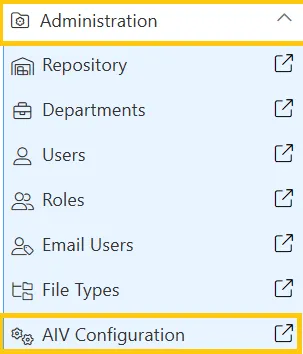

Go to the Administration tab and click on AIV Configuration. This will redirect you to the AIV configuration page.

-

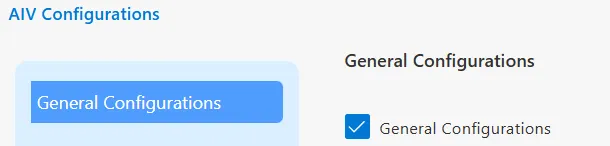

On the AIV Configuration page, click on General Configurations and check the General Configurations checkbox as shown below:

-

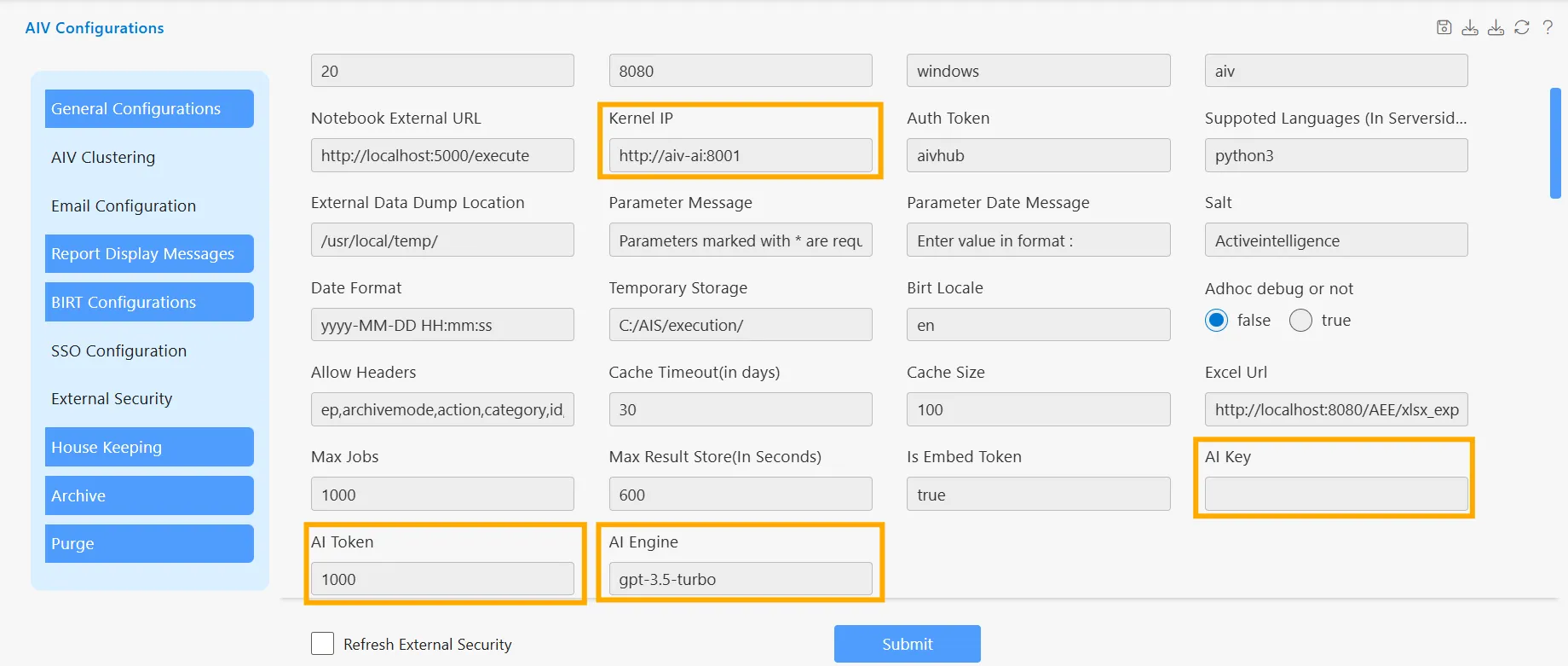

In the General Configuration section, ensure that the following fields are filled out:

-

Kernel IP: Enter the Kernel IP endpoint URL(http://aiv-ai:8001).

-

AI Key: Input your AI key.

-

AI Token: Enter the token limit value.

-

AI Engine: Specify the AI Engine to be used (e.g., gpt-3.5-turbo, gpt-4, etc.).

-

After filling in the fields, click on the Submit button. A confirmation message, Properties Update Successfully, will appear to indicate that the configuration has been saved.

By completing these steps, your AI Buddies will be ready for use, enhancing your dashboard experience with advanced AI-driven capabilities.
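Before submitting the form, it can help to sanity-check the four values together. The sketch below models them as a small Python dataclass with a few basic checks. The class, its field names, and the validation rules are assumptions made for illustration; they do not describe how AIV validates the form internally.

```python
from dataclasses import dataclass


@dataclass
class GeneralConfig:
    """Illustrative container for the four General Configurations fields."""

    kernel_ip: str
    ai_key: str
    ai_token: int
    ai_engine: str

    def problems(self) -> list[str]:
        """Return human-readable issues; an empty list means the values look complete."""
        issues = []
        if not self.kernel_ip.startswith(("http://", "https://")):
            issues.append("Kernel IP should be a full URL such as http://aiv-ai:8001")
        if not self.ai_key.strip():
            issues.append("AI Key is empty")
        if self.ai_token <= 0:
            issues.append("AI Token must be a positive number")
        if not self.ai_engine.strip():
            issues.append("AI Engine is empty")
        return issues


config = GeneralConfig(
    kernel_ip="http://aiv-ai:8001",
    ai_key="sk-example-not-a-real-key",  # placeholder, not a real key
    ai_token=3000,
    ai_engine="gpt-3.5-turbo",
)
print(config.problems())  # []
```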

Additional Guidance

- Connecting via API key

- Obtaining API Keys:

To use the OpenAI and DeepSeek APIs, you must first create accounts on their respective platforms.

For OpenAI, visit https://platform.openai.com/signup to sign up or log in. Once logged in, navigate to https://platform.openai.com/account/api-keys and click “Create new secret key” to generate your API key. Make sure to copy and store the key securely, as it will not be shown again.

For DeepSeek, go to https://platform.deepseek.com and sign up or log in. Then navigate to https://platform.deepseek.com/keys and click “Create API Key”. Copy the generated key and keep it safe.
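Once a key has been generated, one way to confirm that it works is to call the provider's model-listing endpoint with the key sent as a Bearer token. The Python sketch below only builds such a request; the commented lines show how it could be sent. The endpoint URLs are taken from each provider's public API documentation as understood at the time of writing and should be verified there, and the helper name `build_models_request` is hypothetical.

```python
import urllib.request

# Model-listing endpoints as documented by each provider at the time of writing;
# treat these as assumptions and verify them before relying on this sketch.
MODELS_URL = {
    "openai": "https://api.openai.com/v1/models",
    "deepseek": "https://api.deepseek.com/models",
}


def build_models_request(provider: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a GET request that lists models for `provider`."""
    return urllib.request.Request(
        MODELS_URL[provider],
        headers={"Authorization": f"Bearer {api_key}"},
        method="GET",
    )


request = build_models_request("openai", "sk-example-not-a-real-key")
print(request.full_url)

# To test a real key, send the request:
# with urllib.request.urlopen(request, timeout=10) as response:
#     print(response.status)  # 200 indicates the key was accepted
```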

- Local Ollama Integration with Docker:

The AIV Dashboard supports running models locally using Ollama, offering a secure, cost-effective, and offline inference solution. With Docker, you can seamlessly run Ollama inside a container and pull models directly into it.

Steps to Integrate Ollama Locally:

I. Install Ollama in Docker: Add Ollama to your Docker Compose setup using the official Ollama image.

II. Pull the Desired Model Inside Docker: Once inside the container (or through a startup script), pull the required model using the following command: ollama pull deepseek-coder:6.7b-instruct-q4_K_M

III. Run the Ollama Model: Load and start the model inside the container: ollama run deepseek-coder:6.7b-instruct-q4_K_M

IV. Use the Locally Hosted Model: Update the AIV Configuration settings by providing the AI Key as “ollama” and the AI Engine as “deepseek-coder:6.7b-instruct-q4_K_M” or any other compatible model.
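After pulling the model, you may want to confirm that the Ollama server inside the container actually has it before updating the AIV settings. The Python sketch below queries Ollama's `/api/tags` endpoint, which lists locally available models. It assumes the container publishes Ollama's default port 11434 on localhost; adjust `OLLAMA_URL` to match your Docker port mapping. The helper names are hypothetical.

```python
import json
import urllib.request

# Ollama's default port; assumes the container maps it to localhost.
OLLAMA_URL = "http://localhost:11434"


def model_names(tags_payload: dict) -> list[str]:
    """Extract model names from the JSON returned by Ollama's /api/tags endpoint."""
    return [model["name"] for model in tags_payload.get("models", [])]


def is_model_available(name: str, base_url: str = OLLAMA_URL) -> bool:
    """Ask the local Ollama server whether `name` has been pulled."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as response:
        return name in model_names(json.load(response))


if __name__ == "__main__":
    try:
        print(is_model_available("deepseek-coder:6.7b-instruct-q4_K_M"))
    except OSError as exc:  # URLError and timeouts are subclasses of OSError
        print(f"Could not reach Ollama at {OLLAMA_URL}: {exc}")
```

If the check prints False, the model has not been pulled into that container yet, and step II needs to be repeated there.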